What is Quantum Computing?

Whether or not you’ve heard the term “Quantum Computer” before, you are in the right place, because today I’m going to show you a bunch of…

Quantum computers are a hot topic when discussing the future of computing, in both the short and the long term. Separating speculation from reality takes a careful eye, so I’m going to discuss what the current world of quantum computing looks like and what developments may lie ahead.

But first, let’s talk a bit of history, shall we?

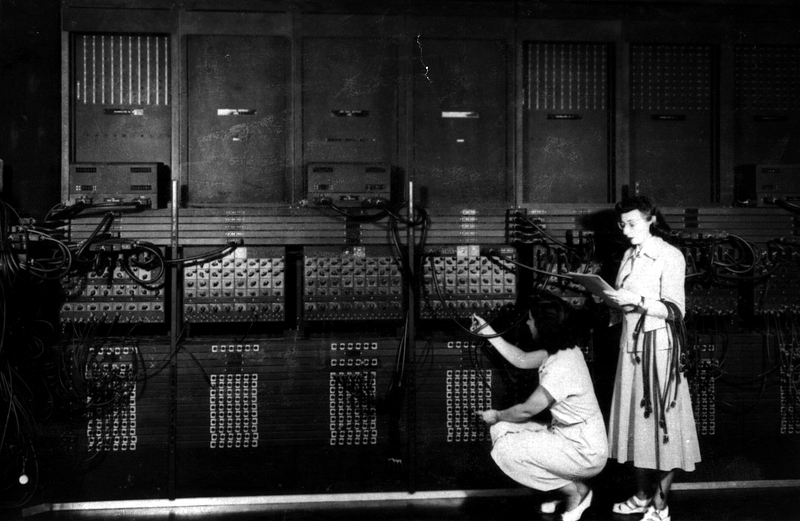

This is the ENIAC, which stands for Electronic Numerical Integrator and Computer. It was the first general-purpose electronic digital computer, able to tackle a wide range of numerical problems. It was invented by J. Presper Eckert and John Mauchly at the University of Pennsylvania to calculate artillery firing tables for the United States Army’s Ballistic Research Laboratory.

A big pile of metal, with a lot of cables and switches, in which electricity runs.

Thanks to its high-speed calculations, the ENIAC could solve problems that were previously impractical. It was roughly a thousand times faster than the existing electromechanical machines: it could perform 5,000 additions or 357 ten-digit multiplications in one second. Isn’t that amazing?

Well, not so much right now… but it was transcendent in those years.

We’ve come a long way since then: computational power has grown exponentially while computers have kept getting smaller. Gordon Moore anticipated this in 1965 with what became known as Moore’s Law. In its commonly quoted form, it states that:

The number of transistors per square inch on integrated circuits had doubled every year since the integrated circuit was invented.

Moore predicted that this trend would continue for the foreseeable future. In subsequent years the pace slowed somewhat, and transistor density has doubled roughly every 18 months to two years. Most experts, including Moore himself, expected Moore’s Law to hold until around 2020–2025.
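To see how much the doubling period matters, here is a minimal Python sketch of the idealized growth formula N(t) = N₀ · 2^(t/T), where T is the doubling period. It assumes perfectly steady doubling, which real chips never quite achieve, and the function name is just illustrative.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Idealized Moore's Law: how many times the transistor count multiplies
    over `years` if it doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


# Compare a few doubling periods over one decade.
for period in (1.0, 1.5, 2.0):
    print(f"doubling every {period} years -> about x{growth_factor(10, period):,.0f} in 10 years")
```

Even the slower pace compounds quickly: doubling every two years still means a 32-fold increase per decade, versus roughly a thousandfold with yearly doubling.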

We are nearing the physical limits of data density: some computer parts are now only a few tens of atoms across, and reality itself is starting to push back.

But why is this happening? Let’s get down to how it all works.

Computers use Bits: 1s and 0s. Every operation a computer performs is ultimately a binary operation, and with enough bits we can do pretty much everything you see in the modern era. These operations are made possible by transistors, tiny switches (roughly 7 nm in today’s most advanced chips) whose only job is to allow or stop the flow of electrons from one side to the other.
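To make the idea that everything reduces to binary operations concrete, here is a minimal Python sketch of adding two numbers using only bit-level XOR, AND and OR, the same logic a ripple-carry adder circuit builds out of transistors. It is an illustration, not how any particular CPU is wired, and the function names are hypothetical.

```python
def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add three single bits using only XOR, AND and OR; return (sum_bit, carry_out)."""
    partial = a ^ b
    sum_bit = partial ^ carry_in
    carry_out = (a & b) | (partial & carry_in)
    return sum_bit, carry_out


def add_binary(x: int, y: int, width: int = 8) -> int:
    """Ripple-carry addition of two unsigned integers, one bit position at a time."""
    result, carry = 0, 0
    for i in range(width):
        a = (x >> i) & 1          # i-th bit of x
        b = (y >> i) & 1          # i-th bit of y
        s, carry = full_adder(a, b, carry)
        result |= s << i          # place the sum bit at position i
    return result                 # any carry past `width` bits is dropped, like fixed-width hardware


print(add_binary(0b0101, 0b0011))  # 5 + 3 -> 8
```

Chaining simple one-bit adders like this is how hardware scales addition up to 32- or 64-bit numbers.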

Now bear with me: the problem with shrinking some of these switches below 7 nm is that controlling electrons at the nanoscale gets tricky, because of an effect called quantum tunneling.

“Quantum what?” you might say.

This just means electrons can pass through a barrier that, by classical rules, should stop them: they effectively vanish on one side and show up on the other. A barrier for us might not be a barrier for them after all. This constraint is very real; it is a physical limit we have only just reached. On top of that, we need to handle more data than ever, with less space and less material available.
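For a feel of why thinner barriers leak, here is a rough Python estimate using the textbook thick-barrier approximation T ≈ e^(−2κL), with κ = √(2m(V − E))/ħ, where L is the barrier width. It assumes a simple rectangular barrier 1 eV above the electron’s energy; that height is an illustrative value, not a real transistor parameter, and the approximation drops a prefactor of order one.

```python
import math

# Physical constants (SI units)
HBAR = 1.054571817e-34            # reduced Planck constant, J*s
ELECTRON_MASS = 9.1093837015e-31  # kg
EV = 1.602176634e-19              # one electron-volt in joules


def tunneling_probability(barrier_width_nm: float, barrier_height_ev: float = 1.0) -> float:
    """Rough chance that an electron tunnels through a rectangular barrier.

    `barrier_height_ev` is how far the barrier sits above the electron's energy (V - E).
    Uses the thick-barrier estimate T ~ exp(-2 * kappa * L), so treat it as an order of magnitude.
    """
    kappa = math.sqrt(2 * ELECTRON_MASS * barrier_height_ev * EV) / HBAR  # decay constant, 1/m
    return math.exp(-2 * kappa * barrier_width_nm * 1e-9)


for width in (3.0, 2.0, 1.0, 0.5):
    print(f"{width:>4} nm barrier: T ~ {tunneling_probability(width):.1e}")
```

Because the width sits in the exponent, shaving off a single nanometer raises the leakage probability by several orders of magnitude in this toy model.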

What if we could manipulate the electron itself? Wouldn’t it be amazing if we could set and measure its spin?

Well, lucky us: researchers are already putting a lot of effort into doing exactly this, helping develop the next generation of computing technology.

Entering Quantum Computing


Quantum computers approach the same computational problems in a new way, by using Qubits.

As opposed to Bits, which are either 1 or 0, Qubits can be in a combination of 1 and 0 at once. This is called superposition, and it means a Qubit’s value is not determined until the moment you measure it. In many of today’s quantum computers, Qubits are controlled with microwave pulses, carefully calibrated so that a given pulse shape, frequency, and duration puts a Qubit into superposition or flips its state. They are also read out with microwave signals. The real challenge is coming up with algorithms whose measured result is the correct answer with high probability, despite this inherent randomness.
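Here is a toy, classical Python simulation of a single Qubit as a pair of amplitudes. It is not real hardware control (actual devices use calibrated pulses); the Hadamard gate stands in for a pulse that creates superposition, the X gate for one that flips the state, and measurement returns 0 or 1 with probabilities given by the squared amplitudes. Gate names follow standard textbook notation; the helper functions are hypothetical.

```python
import math
import random

# Toy model: a qubit is a pair of complex amplitudes (alpha, beta) for the states 0 and 1.
Qubit = tuple[complex, complex]
Gate = list[list[complex]]

S = 1 / math.sqrt(2)
H: Gate = [[S, S], [S, -S]]  # Hadamard: turns a definite 0 into an equal superposition
X: Gate = [[0, 1], [1, 0]]   # Pauli-X: flips 0 <-> 1, like a classical NOT


def apply_gate(gate: Gate, q: Qubit) -> Qubit:
    """Multiply the 2x2 gate matrix by the state vector (alpha, beta)."""
    alpha, beta = q
    return (gate[0][0] * alpha + gate[0][1] * beta,
            gate[1][0] * alpha + gate[1][1] * beta)


def probabilities(q: Qubit) -> tuple[float, float]:
    """Chance of reading 0 or 1: the squared magnitude of each amplitude."""
    return abs(q[0]) ** 2, abs(q[1]) ** 2


def measure(q: Qubit, rng: random.Random) -> int:
    """Simulate a measurement: return 0 or 1 at random, weighted by the probabilities."""
    p0, _ = probabilities(q)
    return 0 if rng.random() < p0 else 1


zero: Qubit = (1, 0)
flipped = apply_gate(X, zero)      # a definite 1
superposed = apply_gate(H, zero)   # 0 and 1 with equal weight
rng = random.Random(7)
print(probabilities(flipped))
print(probabilities(superposed))
print([measure(superposed, rng) for _ in range(10)])
```

The flipped Qubit always reads 1, while the superposed one reads 0 or 1 about half the time each, which is why useful quantum algorithms must steer the amplitudes toward the right answer before measuring.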

For example, 4 Bits can be arranged in 2⁴ = 16 configurations, but they can only hold one of them at a time. 4 Qubits in superposition, on the other hand, can hold a combination of all 2⁴ configurations at the same time, even though a measurement still returns just one.
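A classical simulation makes the 2⁴ count tangible: describing 4 Qubits takes a list of 2⁴ = 16 amplitudes, one per configuration. This Python sketch is a toy state-vector simulation, not a real quantum program, and its helper names are hypothetical. It applies a Hadamard gate to each of 4 Qubits starting from 0000 and ends with all 16 configurations carrying equal weight.

```python
import math


def apply_hadamard(state: list[float], target: int) -> list[float]:
    """Apply a Hadamard gate to qubit `target` of a real-valued state vector.

    The binary digits of each index are the qubit values; pairs of indices that
    differ only in the target bit are mixed into (a + b)/sqrt(2) and (a - b)/sqrt(2).
    """
    s = 1 / math.sqrt(2)
    new_state = state[:]
    for i in range(len(state)):
        if not (i >> target) & 1:      # index i has the target qubit set to 0
            j = i | (1 << target)      # partner index with the target qubit set to 1
            a, b = state[i], state[j]
            new_state[i] = s * (a + b)
            new_state[j] = s * (a - b)
    return new_state


n_qubits = 4
state = [0.0] * 2 ** n_qubits   # one amplitude per configuration: 16 of them
state[0] = 1.0                  # start in 0000
for qubit in range(n_qubits):
    state = apply_hadamard(state, qubit)

for index, amplitude in enumerate(state):
    print(format(index, f"0{n_qubits}b"), round(amplitude, 3))
```

Each extra Qubit doubles the length of this list, which is also why simulating many Qubits on a classical computer quickly becomes impractical.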

That’s only 4 Qubits, but what if we could handle 64? That would mean 2⁶⁴, or 18,446,744,073,709,551,616, possibilities at the same time. Storing just one bit for each of them would take about 2.3 exabytes!
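The 2.3-exabyte figure corresponds to storing a single bit for each of the 2⁶⁴ configurations. A short Python check of that arithmetic, assuming decimal exabytes (1 EB = 10¹⁸ bytes):

```python
n_qubits = 64
configurations = 2 ** n_qubits        # exact integer count of configurations
bytes_needed = configurations // 8    # one bit per configuration, 8 bits per byte
exabytes = bytes_needed / 10 ** 18    # decimal exabytes

print(f"{configurations:,} configurations")
print(f"{exabytes:.1f} EB at one bit each")
```

A full classical description of 64 Qubits would need far more than this, since each configuration carries a complex amplitude rather than a single bit.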

In comparison, a regular computer cycling through these combinations one per clock cycle at 2 GHz (2 billion operations per second) would take about 292 years.
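Here is a quick check of this estimate in Python, assuming one combination per clock cycle and a 365.25-day year. It shows that 2⁶⁴ combinations take about 292 years at 2 GHz; the often-quoted 585-year figure corresponds to 1 GHz. The function name is illustrative.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600   # Julian year


def years_to_enumerate(n_bits: int, ops_per_second: float) -> float:
    """Years needed to visit all 2**n_bits combinations at one combination per operation."""
    return 2 ** n_bits / ops_per_second / SECONDS_PER_YEAR


for ghz in (1, 2):
    print(f"{ghz} GHz: about {years_to_enumerate(64, ghz * 1e9):.0f} years")
```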

At the time of writing, researchers could reliably control only around 50 Qubits, and those were noisy rather than error-corrected, nowhere near what we need to start getting real benefits out of this technology. But what if we could handle the states of millions of Qubits? What would we be able to do?

Well, the list is long, but the most promising advances are expected in fields that handle lots of data or benefit from heavy simulation: searching large unsorted databases, particle physics, weather forecasting, cryptography, chemistry, AI, protein folding, and much more. Still, powerful as quantum computers may be, they probably won’t replace our home computers. For most computations, a quantum computer would be only as fast as a classical computer (or slower), so in reality the two will most likely co-exist.
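As one concrete example of the database-search case, Grover’s algorithm (a well-known quantum search algorithm, not named in the text above) finds a marked item among N in roughly (π/4)√N steps instead of the roughly N/2 a classical scan needs on average. This Python sketch classically simulates its amplitudes for 8 items; the function name and the marked index are hypothetical.

```python
import math


def grover_success_probability(n_qubits: int, marked: int, iterations: int) -> float:
    """Classically simulate Grover's search over 2**n_qubits items with one marked item."""
    size = 2 ** n_qubits
    state = [1 / math.sqrt(size)] * size       # uniform superposition over all items
    for _ in range(iterations):
        state[marked] = -state[marked]         # oracle: flip the sign of the marked item
        mean = sum(state) / size
        state = [2 * mean - a for a in state]  # diffusion: reflect every amplitude about the mean
    return state[marked] ** 2                  # probability of measuring the marked item


n = 3                                                  # 8 items
optimal = math.floor(math.pi / 4 * math.sqrt(2 ** n))  # roughly (pi/4) * sqrt(N) iterations
print(optimal, grover_success_probability(n, marked=5, iterations=optimal))
```

The speedup is quadratic, not exponential, which fits the point above: quantum computers shine on specific problems rather than on everyday tasks.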

In Conclusion

Current computers will not go away anytime soon, and quantum computers still have a long way to go. However, just as the first classical computers evolved over the years, we will see quantum technology slowly take shape. With the right innovations in quantum algorithms and continued research in physics, adoption will likely come first to universities; then, as prices continue to drop, large and medium-sized companies will start acquiring units, and eventually the technology could become accessible to the public for mainstream use. One day, it could even replace some of the technology our current devices rely on.